Scaffold

2025

Role

Product Designer

Responsibilities

Conceptual exploration of ethical AI/UX patterns prioritising transparency, agency, and trust

Key Focus

• How existing AI/UX principles apply to a new synthesis tool

• Designing for transparency - showing AI reasoning and confidence levels

• Creating validation points that keep users in control

• Balancing AI pattern recognition with human judgment

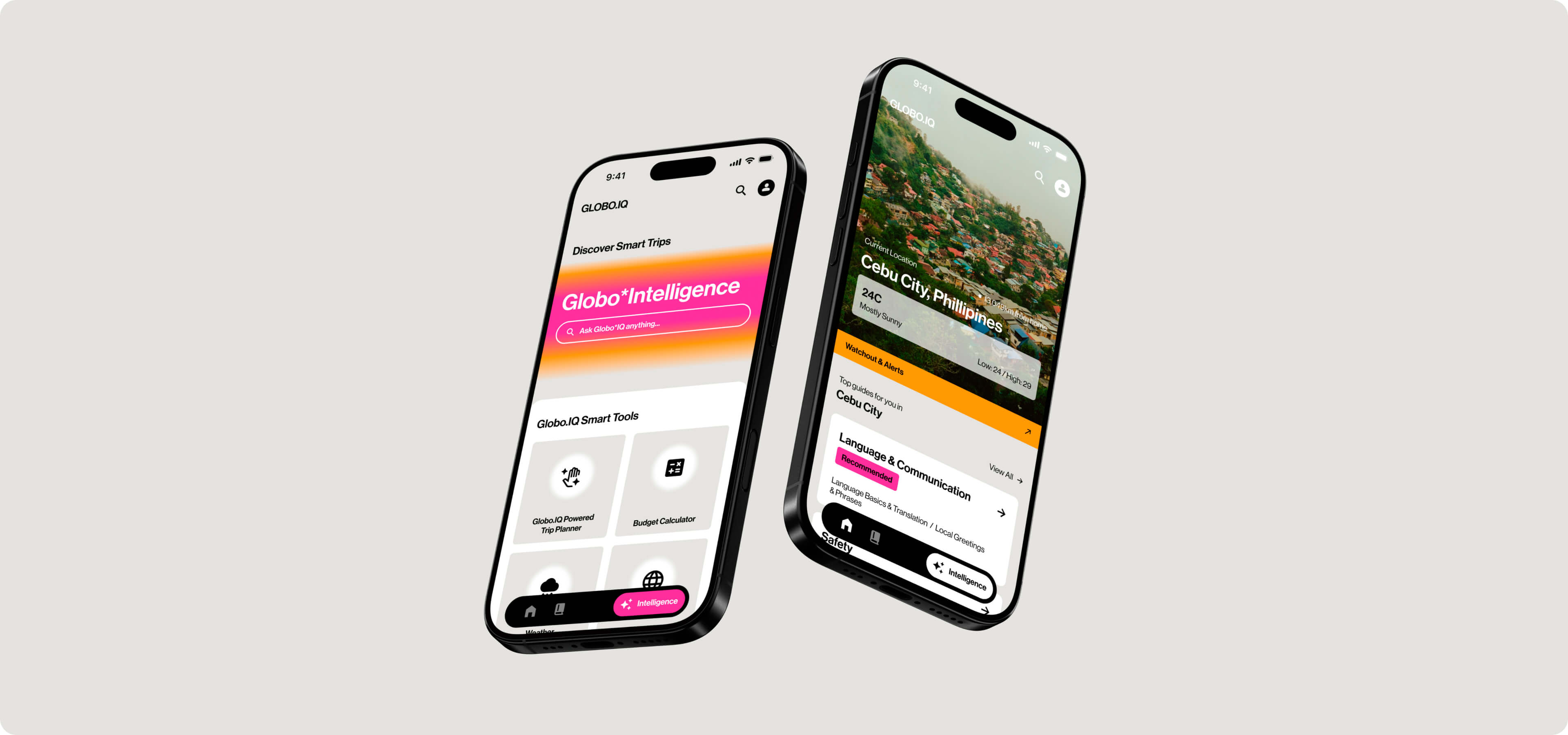


Exploring trustworthy AI design: Applying principles to stakeholder synthesis

Scaffold is a conceptual tool I used to test how transparency and validation patterns might maintain human control while accelerating this workflow.

(01)

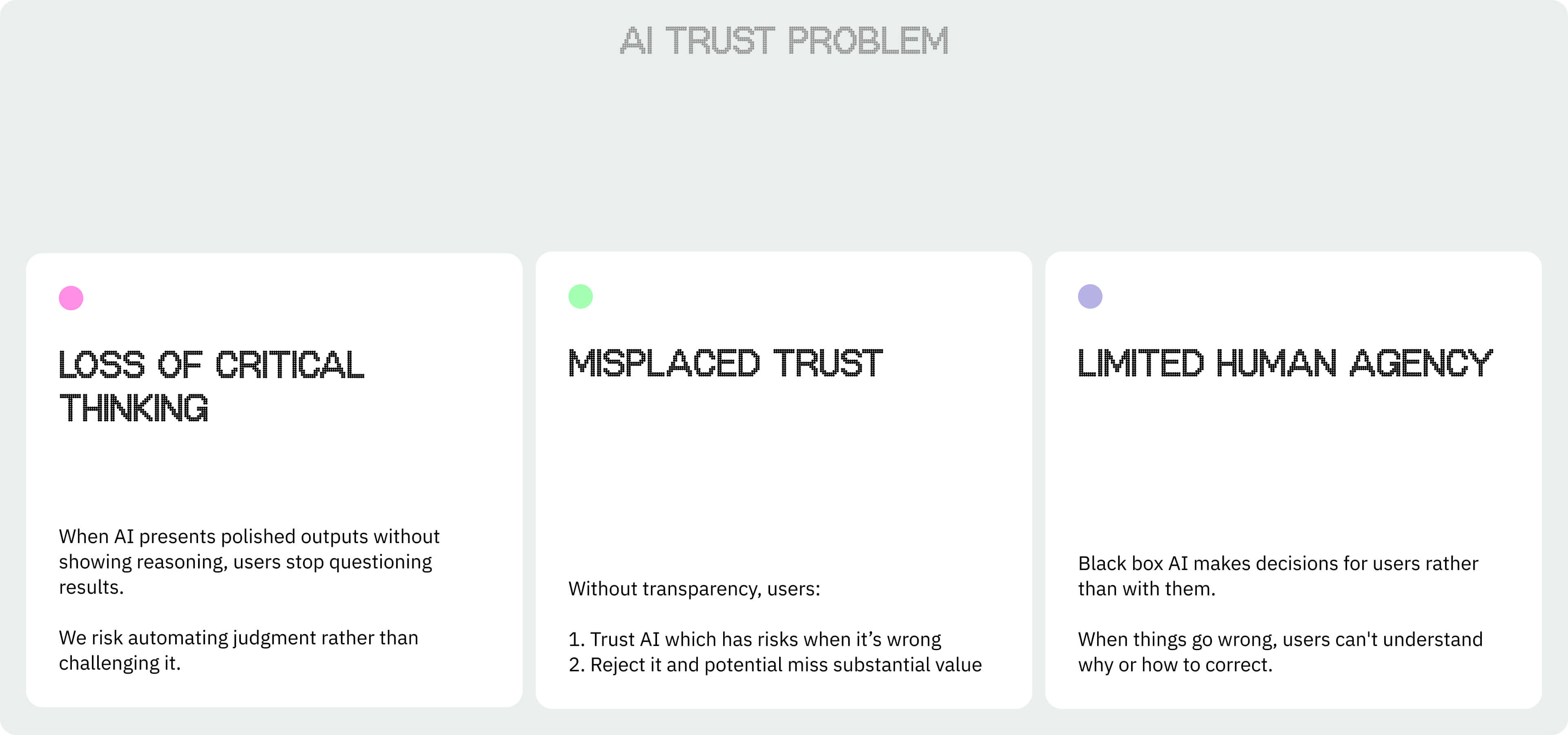

The AI Trust Problem

AI tools increasingly make important decisions in our work, from writing to analysis to recommendations.

But most AI operates as a black box: users see outputs without understanding how the AI reached its conclusions, what it's confident about, or where it might be wrong.

(02)

AI/UX Principles

Application

Drawing on my own use of AI tools, observation, and research, I identified areas I wanted to explore through my design practice.

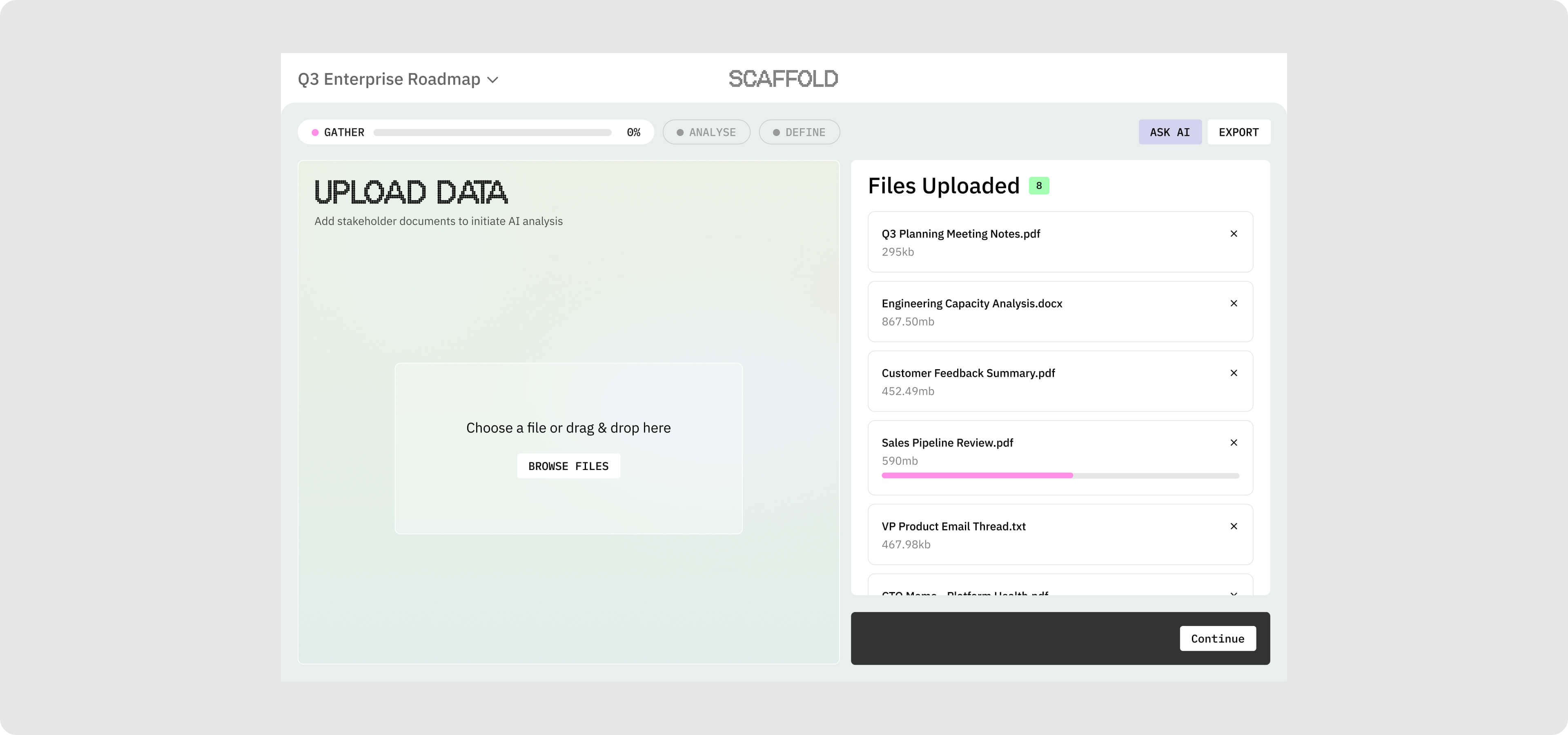

Rather than building a production tool, I focused on testing how these principles might translate to real design decisions through a conceptual stakeholder synthesis tool called Scaffold.


(03)

Applying Principles

Context

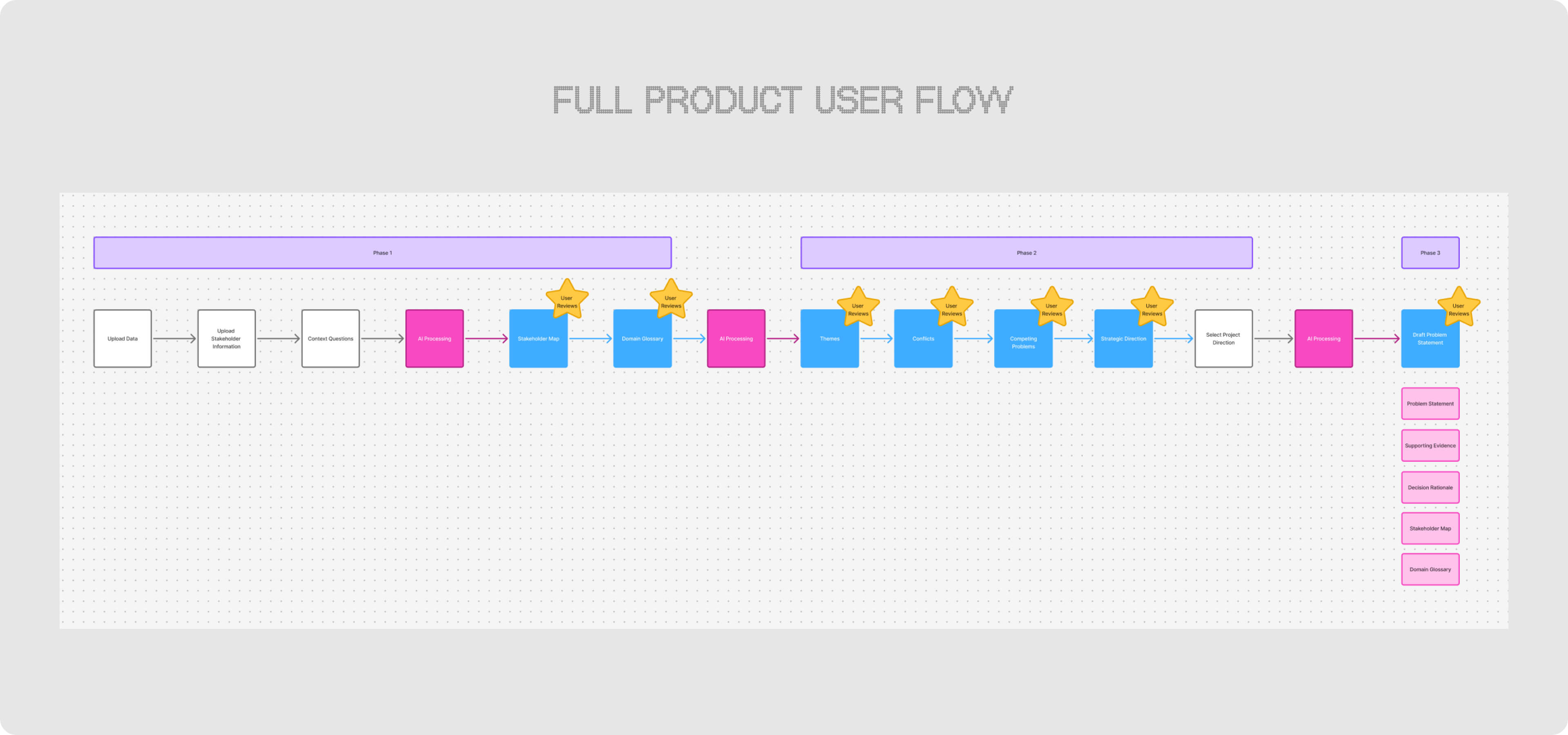

Scaffold shows how AI can accelerate stakeholder synthesis from weeks to minutes through transparent, validated collaboration, without removing human judgment.

This conceptual tool let me practice applying transparency and validation principles to a real design problem I've experienced.

(04)

Principles Demonstrated Through Design

Transparency over black boxes

The Problem

When AI isn't explicit about its reasoning, it is hard for users to trust it. They can't validate outputs or understand where the AI might be wrong.

The Principle

Be clear about AI reasoning, confidence levels, and evidence at every decision point so users can verify claims and maintain appropriate doubt.

Implementation in Scaffold

• Confidence scores

• Evidence, including number of mentions and quotes taken directly from source materials

• Validation required - user reviews before AI proceeds
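A minimal sketch of how these three elements might be modelled together. Every name here (`Finding`, `Evidence`, `validate`, `canProceed`) is hypothetical and chosen for illustration, since Scaffold is a conceptual design rather than a built product. It assumes confidence is a value from 0 to 1 and that only an explicit user review can mark a finding as validated.

```typescript
// Hypothetical data model: these names are illustrative, not Scaffold's own.
interface Evidence {
  quote: string;  // direct quote from the source material
  source: string; // where the quote came from
}

interface Finding {
  theme: string;
  confidence: number; // assumed range 0 to 1, always shown to the user
  mentions: number;   // how many times the theme appeared
  evidence: Evidence[];
  validated: boolean; // flipped only by an explicit user review
}

// Represents the user's review action; returns a new object, no mutation.
function validate(finding: Finding): Finding {
  return { ...finding, validated: true };
}

// The AI may proceed only once every finding has been reviewed.
function canProceed(findings: Finding[]): boolean {
  return findings.length > 0 && findings.every((f) => f.validated);
}
```

Keeping confidence, evidence, and the validation flag on the same record means the interface cannot show a claim without also having what the user needs to check it.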


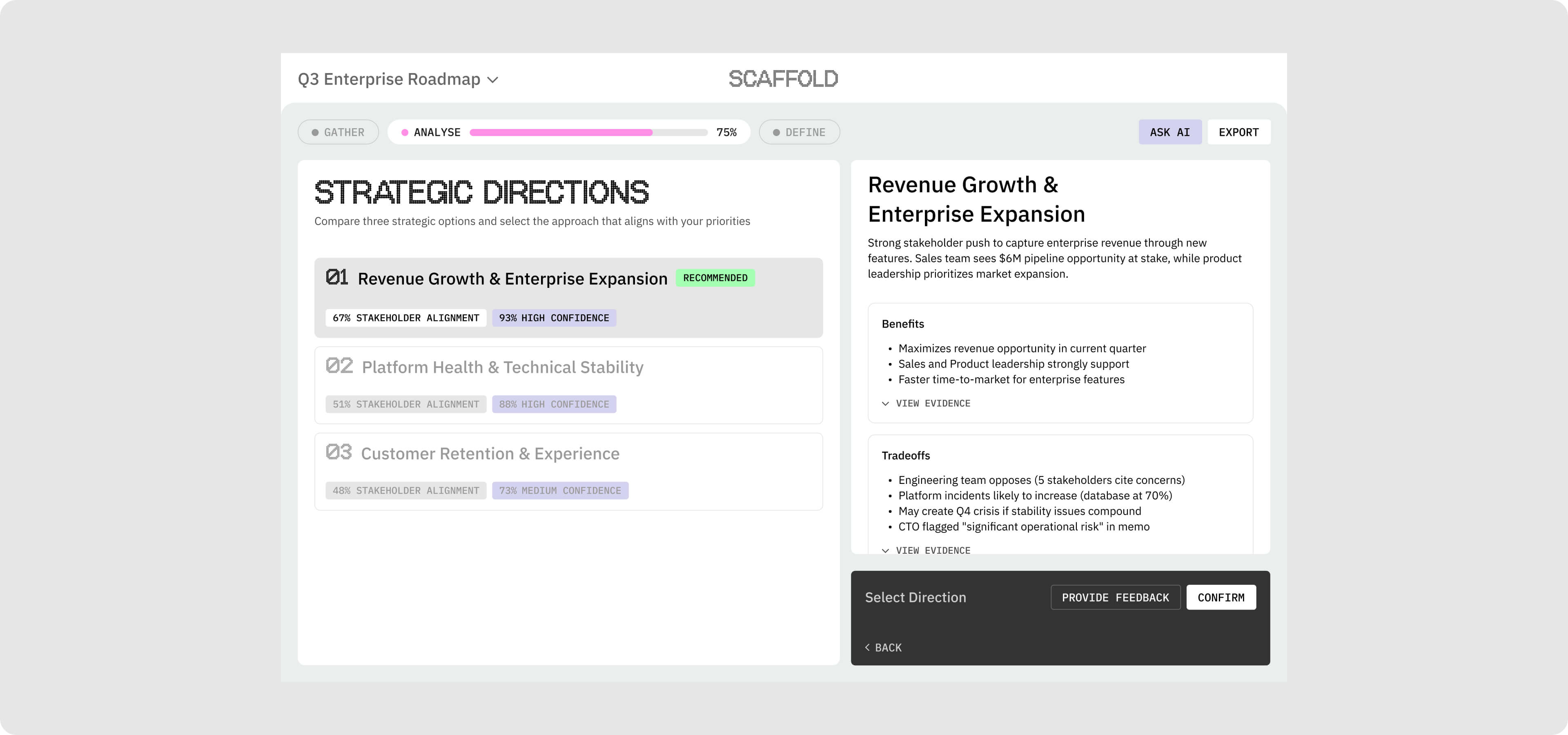

Collaborative intelligence over automation

The Problem

AI designed to replace human work removes the thinking that makes the work valuable. This leads to over-reliance on potentially inaccurate recommendations.

The Principle

AI handles pattern recognition whilst users make judgment calls.

Implementation in Scaffold

• AI generates strategic options with explicit, varying levels of confidence

• Each option shows percentage of stakeholder support, pros/cons, and trade-offs

• AI recommends whilst user compares and decides

• AI never auto-selects or advances without the user's decision
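The split between recommending and deciding can be sketched as below. This is an illustration under assumptions, not an actual implementation: the type and function names are hypothetical, confidence is assumed to range from 0 to 1, and `stakeholderShare` stands in for the stakeholder percentage, whose exact measure is not specified here.

```typescript
// Hypothetical types: names are illustrative, not taken from Scaffold.
interface StrategicOption {
  id: string;
  confidence: number;       // assumed range 0 to 1
  stakeholderShare: number; // stakeholder percentage, 0 to 100 (measure assumed)
  pros: string[];
  cons: string[];
}

interface Decision {
  recommendedId: string | null; // AI suggestion, advisory only
  selectedId: string | null;    // set only by the user
}

// The AI points at the highest-confidence option but never selects it.
function recommend(options: StrategicOption[]): Decision {
  const top = options.reduce<StrategicOption | null>(
    (best, o) => (best === null || o.confidence > best.confidence ? o : best),
    null
  );
  return { recommendedId: top ? top.id : null, selectedId: null };
}

// Only an explicit user choice fills in selectedId.
function userSelect(decision: Decision, options: StrategicOption[], id: string): Decision {
  if (!options.some((o) => o.id === id)) {
    throw new Error(`Unknown option: ${id}`);
  }
  return { ...decision, selectedId: id };
}

// The workflow advances only after the user has chosen.
function canAdvance(decision: Decision): boolean {
  return decision.selectedId !== null;
}
```

Storing the recommendation and the selection in separate fields makes it impossible for a high confidence score to stand in for a decision, and it leaves the user free to choose an option other than the recommended one.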

(05)

Design Decisions & Ethical Trade-offs

Trade-offs Explored

Transparency vs. Simplicity

Progressive disclosure of information balances summaries for scanning with expandable details for validation

Speed vs. Agency

Chose not to auto-select the option with the highest confidence; the user always chooses the strategic direction to maintain control

Confidence Communication

Maintained transparency and honesty by showing low confidence scores rather than hiding them

Design scope: principles over production

This conceptual project let me practice applying AI/UX principles through essential workflows, not comprehensive features.

A production version would expand functionality, but would be designed around the same core principles.

(06)

Learnings

Transparency needs additional supporting behaviours

Through this project, I learned that transparency needs validation loops and confidence communication to be actionable - these principles work as a feedback system.

Restraint over feature overload

This exploration taught me that effective AI/UX comes from designing the minimum interactions that maintain human control, not exposing every capability the AI can perform.